
Amira Abdel-Rahman authored

AI that Grows

Research and development of workflows for the co-design of reconfigurable AI software and hardware.

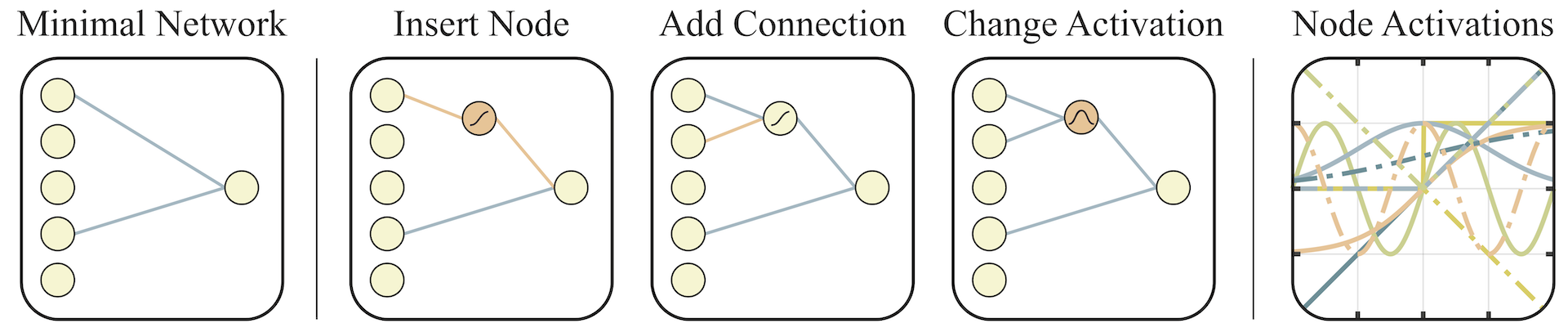

Weight Agnostic Neural Networks (WANN)

Introduction


Paper

- "focus on finding minimal architectures".

- "By deemphasizing learning of weight parameters, we encourage the agent instead to develop ever-growing networks that can encode acquired skills based on its interactions with the environment".

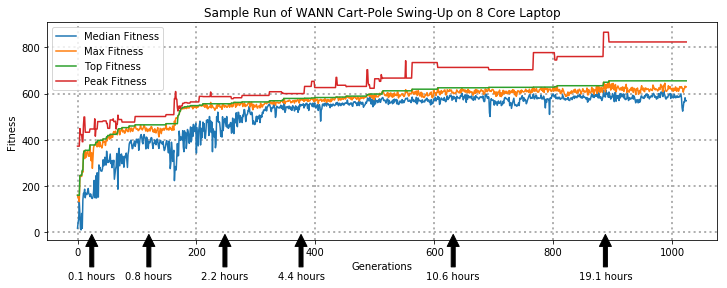

Case Study: Cart-Pole Swing Up

Cart-pole swing-up is one of the best-known benchmarks in nonlinear control, and many approaches have been applied to it, including standard Q-learning over a discretized state space, and deep Q-learning or linear Q-learning over a continuous state space.

WANN is interesting because it searches for the simplest network that maps the input sensors (position, rotation, and their derivatives) to the output (force). It focuses on learning principles rather than only tuning weights.
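The weight-agnostic evaluation idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the WANN library's code: every connection in a candidate topology shares one weight `w`, the topology is run once per value in a fixed sweep of shared weights (the values below are assumed to mirror the series used in the WANN paper), and its rewards are summarized by their mean and best value (the paper additionally favors networks with fewer connections). `forward`, `weight_agnostic_score`, and the small activation table are hypothetical names.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

# Assumed sweep of shared weight values (modeled on the series used in the WANN paper).
WEIGHT_VALUES = (-2.0, -1.0, -0.5, 0.5, 1.0, 2.0)

# Small illustrative activation library; "inverse" negates its input.
ACTIVATIONS: Dict[str, Callable[[float], float]] = {
    "linear": lambda x: x,
    "inverse": lambda x: -x,
    "gaussian": lambda x: math.exp(-x * x / 2.0),
    "tanh": math.tanh,
}

Node = Tuple[str, List[int]]  # (activation name, indices of source values)


def forward(nodes: Sequence[Node], inputs: Sequence[float], w: float) -> float:
    """Run a feed-forward graph in which every connection has the same weight w.

    Indices 0..len(inputs)-1 refer to the inputs; node k (0-based) is stored at
    index len(inputs) + k. Nodes must be in topological order, and the last
    node is treated as the output.
    """
    values = list(inputs)
    for act, sources in nodes:
        values.append(ACTIVATIONS[act](sum(w * values[s] for s in sources)))
    return values[-1]


def weight_agnostic_score(evaluate: Callable[[float], float]) -> Tuple[float, float]:
    """Return the (mean, best) reward of one topology across the shared-weight sweep."""
    rewards = [evaluate(w) for w in WEIGHT_VALUES]
    return sum(rewards) / len(rewards), max(rewards)


# Toy usage: score a single "inverse" node on the mapping x -> -x
# (negative mean squared error as the reward).
net: List[Node] = [("inverse", [0])]
data = [(-1.0, 1.0), (0.5, -0.5), (2.0, -2.0)]
mean, best = weight_agnostic_score(
    lambda w: -sum((forward(net, [x], w) - y) ** 2 for x, y in data) / len(data)
)
```

In the cart-pole case, `evaluate(w)` would instead run a full episode with the shared weight set to `w` and return the episode reward.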

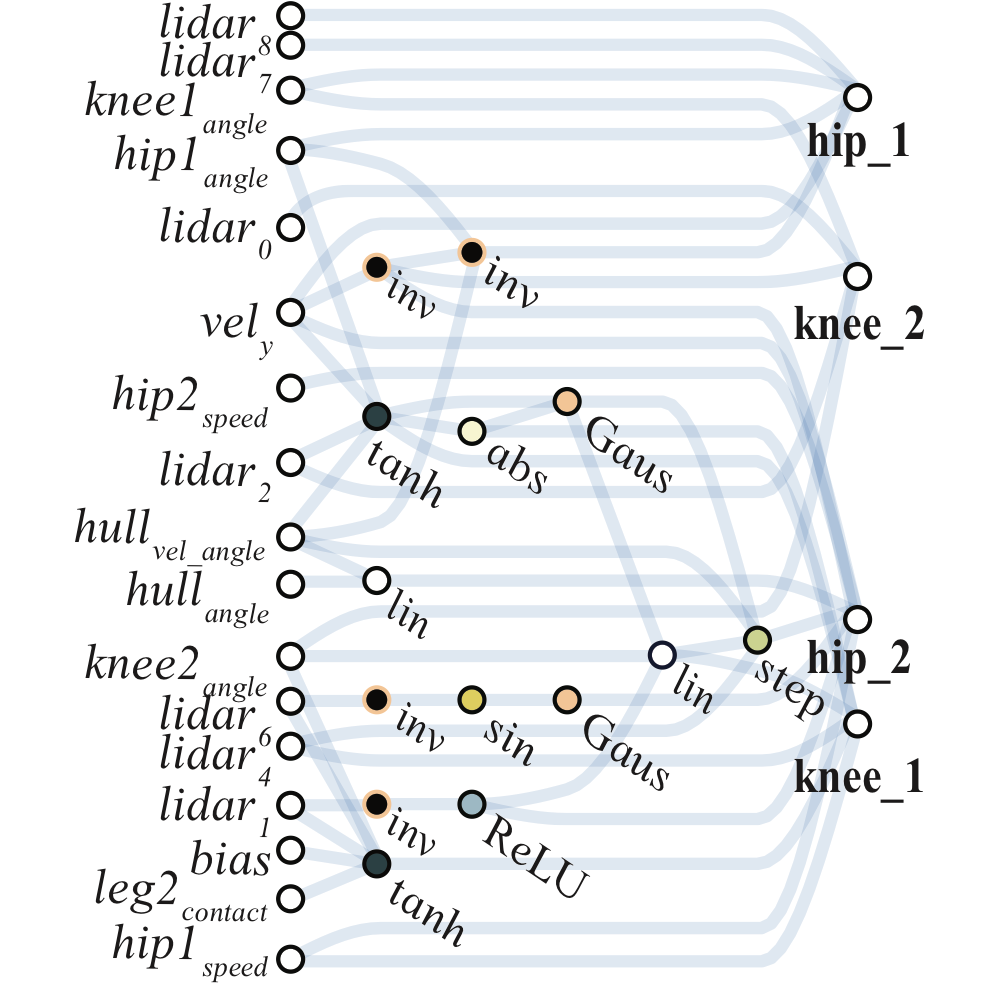

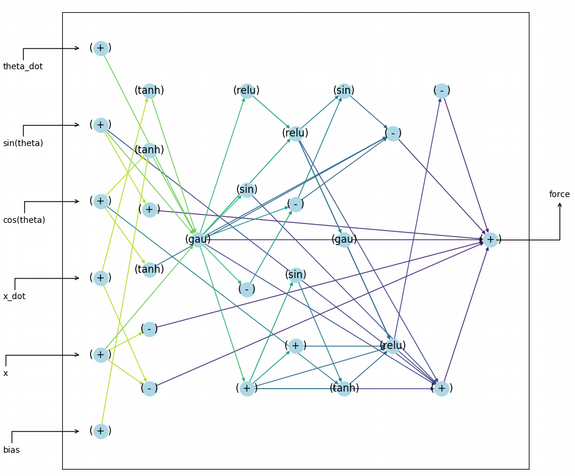

This is one of the networks produced by the search. Because of its simplicity it is not a black box, and one can deduce the principles it learned [1](https://towardsdatascience.com/weight-agnostic-neural-networks-fce8120ee829):

- The position input is connected almost directly to the force through a single inverter: whether the cart is to the right or left of the center (±x), it always pushes in the opposite direction, back toward the center.

- Because all connections share a single weight, it learned that one inverter is not enough, so it doubled it.

- It also discovered symmetry: most paths pass through a Gaussian activation, which gives the same result for -x and x, making the network agnostic to the sign of the input.
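The first and third observations can be checked on toy paths. This is an illustration under assumptions, not the evolved network in the figure: if the position passes through a weighted connection, an inverter, and a second weighted connection that all share the same weight `w`, the output is `-w**2 * x`, which opposes the displacement for every nonzero `w`; and a Gaussian node fed by `w * x` returns the same value for `x` and `-x`. The function names and the weight sweep are hypothetical.

```python
import math

# Assumed shared-weight sweep, as in a weight-agnostic evaluation.
WEIGHT_VALUES = (-2.0, -1.0, -0.5, 0.5, 1.0, 2.0)


def inverter_path(x: float, w: float) -> float:
    """position --w--> inverter (negation) --w--> force; equals -w**2 * x."""
    hidden = -(w * x)
    return w * hidden


def gaussian_node(x: float, w: float) -> float:
    """Gaussian activation of a weighted input; even in x for any w."""
    return math.exp(-((w * x) ** 2) / 2.0)


for w in WEIGHT_VALUES:
    for x in (-1.5, -0.2, 0.2, 1.5):
        # The force always has the opposite sign to the displacement from the center.
        assert inverter_path(x, w) * x < 0
        # The Gaussian branch cannot distinguish x from -x.
        assert gaussian_node(x, w) == gaussian_node(-x, w)
```

Because the sign of `w` cancels in `-w**2 * x`, this path behaves the same way at every point of the weight sweep, which is the kind of structure a weight-agnostic search rewards.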

Example Implementation: Frep Search

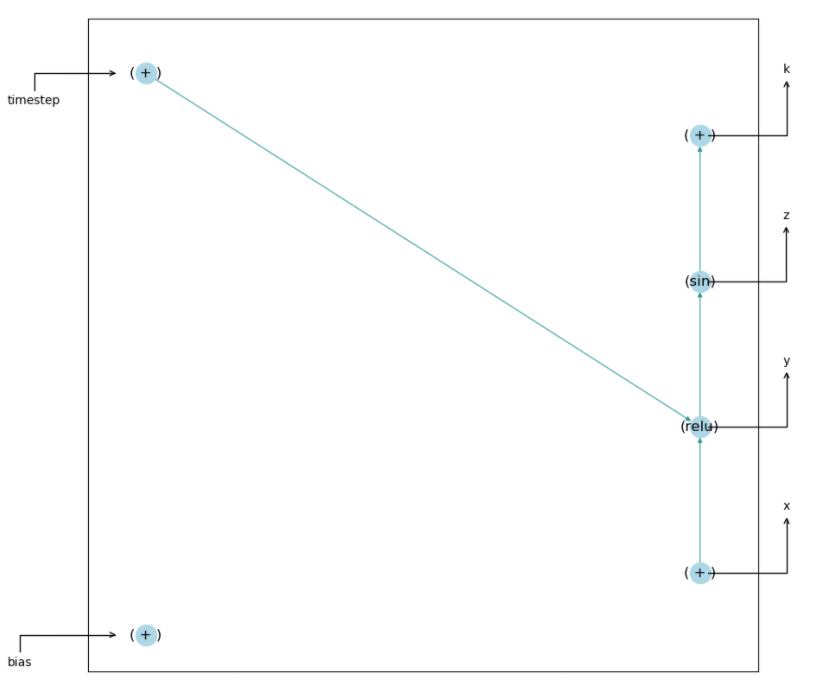

As a first step toward understanding the code and WANN training, I implemented a toy problem: learning the functional representation of a target image. Given the (x, y) position of every pixel in the image, the goal is to find the distance function that represents how far each pixel is from the edge of the shape.

In the following training run, the target shape is a circle and the inputs are the x and y positions of the pixels. The search found a minimal neural network architecture (built from a given library of nonlinear functions) that maps the input positions to the target shape.
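A minimal sketch of the target and the loss for this toy problem, assuming a circle of radius 0.5 centered in a [-1, 1] x [-1, 1] image, a signed distance convention (negative inside, zero on the edge, positive outside), and mean squared error against the target field as the loss to minimize. `circle_sdf`, `pixel_grid`, and `frep_loss` are hypothetical names; in the actual search the candidate would be a WANN graph built from the activation library rather than a Python function.

```python
import math
from typing import Callable, List, Tuple

Field = Callable[[float, float], float]  # (x, y) -> distance value


def circle_sdf(x: float, y: float, cx: float = 0.0, cy: float = 0.0, r: float = 0.5) -> float:
    """Signed distance to a circle: negative inside, zero on the edge, positive outside."""
    return math.hypot(x - cx, y - cy) - r


def pixel_grid(n: int) -> List[Tuple[float, float]]:
    """Centers of an n x n pixel grid covering [-1, 1] x [-1, 1]."""
    coords = [-1.0 + (2.0 * i + 1.0) / n for i in range(n)]
    return [(x, y) for y in coords for x in coords]


def frep_loss(candidate: Field, n: int = 32, target: Field = circle_sdf) -> float:
    """Mean squared error between a candidate field and the target field (lower is better)."""
    pts = pixel_grid(n)
    err = sum((candidate(x, y) - target(x, y)) ** 2 for x, y in pts)
    return err / len(pts)
```

For example, `lambda x, y: x * x + y * y - 0.25` has the correct zero set (the circle's edge) but wrong distances elsewhere, so it gets a positive loss, while the exact distance field scores zero.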

Graph Evolution:

Target Evolution:

Rover and Walker Training

Now that I have a good understanding of how to extend and train new WANN models, I started looking for the best strategy to train rover and walker control.

First, since the MetaVoxel code is in Julia and the WANN library is in Python, I used the pyjulia library to efficiently compile and run the simulation from Python and to pass information back and forth between the two.
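The "load once, call many times" pattern can be sketched in Python. This is a hedged sketch, not the project's integration code: `load_simulator` and `evaluate_population` are hypothetical names, and the toy `simulate` function stands in for the Julia simulation. Caching the loader with `functools.lru_cache` means the expensive setup (with pyjulia, starting Julia and loading the Julia sources) runs once per Python process, and later calls reuse it.

```python
from functools import lru_cache
from typing import Callable, List, Sequence


@lru_cache(maxsize=None)
def load_simulator() -> Callable[[Sequence[float]], float]:
    """Do the expensive one-time setup and return a simulation callable.

    With pyjulia this is where Julia would be started and the simulation code
    loaded (for example `from julia import Main`, then `Main.include(path)`);
    the exact calls depend on the local setup. A toy Python function stands in
    for the Julia simulation here.
    """

    def simulate(params: Sequence[float]) -> float:
        # Hypothetical stand-in for a simulation run that returns a reward.
        return sum(p * p for p in params)

    return simulate


def evaluate_population(population: Sequence[Sequence[float]]) -> List[float]:
    """Evaluate every candidate, reusing the cached simulator."""
    simulate = load_simulator()  # only the first call does the setup
    return [simulate(params) for params in population]
```

If the candidates are evaluated in a process pool, each worker process keeps its own cache, so the setup runs once per worker rather than once per simulation.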

Now that this is working, the next step is to properly formulate the problem and define the variables and the target reward function.

1- Rover

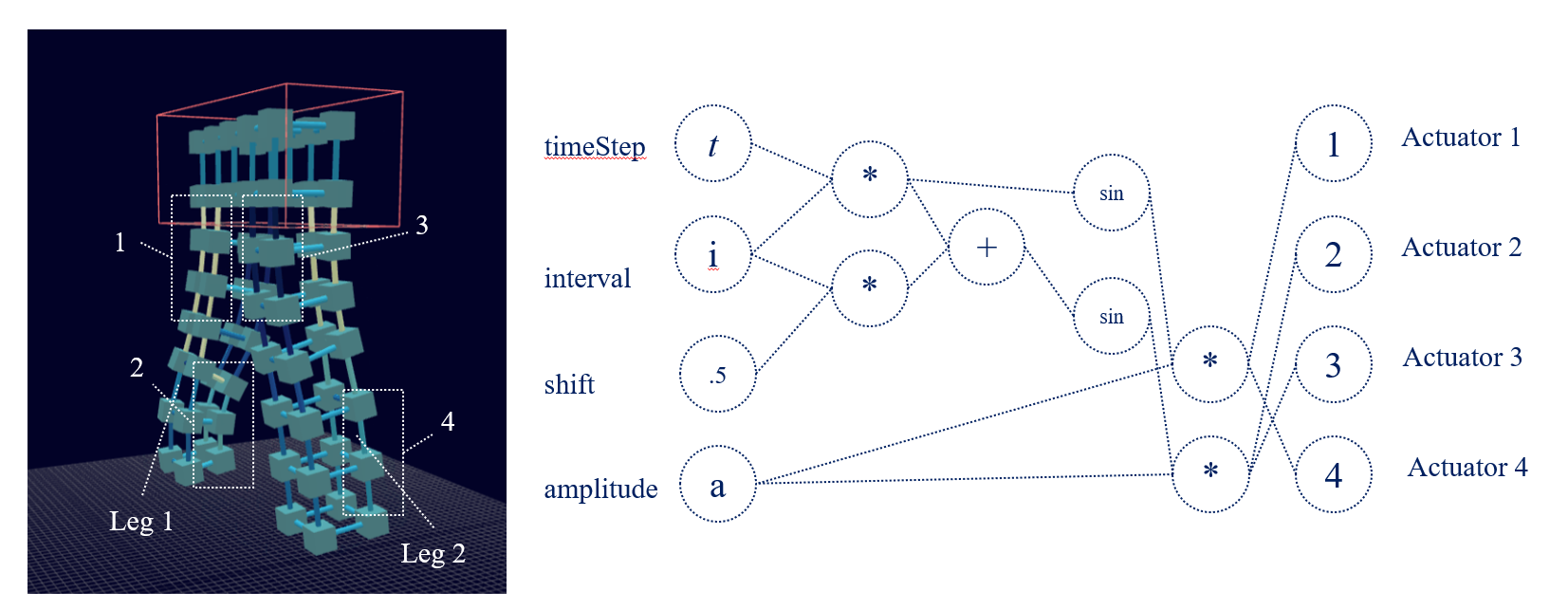

Function Graph, Simulation Demo, Progress

- Variables

  - Modularity and Hierarchy

    - Number of Variables

      - For the rover, the robot has four legs and each leg has three degrees of freedom, so the most direct way to set up the search is to treat all 12 degrees of freedom as independent.

      - Similar robots and animals usually have two legs that move identically, independently of the other two legs, so we can assume 6 independent degrees of freedom.

      - We can further assume that all legs move in the same manner, with each pair shifted in phase, which reduces the search to 3 degrees of freedom plus the best phase shift.

      - I will explore all three routes and compare the training speed and final performance of the different strategies.

    - Time frame

      - The most straightforward approach is to give the robot a time series (from 0 to the total time) and see what it can do in that time.

      - However, since I want the movement to be modular and extendable, I will divide the total time into N time frames and repeat the movement in each one.

      - This should push the robot to learn smooth, cyclic motions.


- Objective Function

  - For now, I will use the maximum distance traveled in the x direction.

  - Next, I will explore steering right and left.

  - After that, the robot will try to follow a target.
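The 3-DOF parameterization with repeating time frames can be sketched as follows, assuming the evolved network maps a cycle phase in [0, 1) to the three joint commands of one leg. Every leg runs the same controller with its own phase shift; with an assumed leg order in which legs 0 and 3 form one pair, shifts such as `[0.0, 0.5, 0.5, 0.0]` make each pair move identically, a special case of the 6-DOF grouping, while 12 independent outputs would correspond to the fully independent case. `cyclic_phase`, `toy_leg_controller`, `rover_joint_commands`, and `x_distance_reward` are hypothetical, and the reward (how far the most forward voxel moved along x) is an assumed reading of the objective above.

```python
import math
from typing import Callable, List, Sequence

LegController = Callable[[float], List[float]]  # phase in [0, 1) -> 3 joint commands


def cyclic_phase(t: float, total_time: float, n_frames: int) -> float:
    """Map time in [0, total_time] to a phase in [0, 1) that repeats n_frames times."""
    period = total_time / n_frames
    return (t % period) / period


def toy_leg_controller(phase: float) -> List[float]:
    """Hypothetical stand-in for an evolved network driving one 3-DOF leg."""
    angle = 2.0 * math.pi * phase
    return [0.5 * math.sin(angle), 0.3 * math.cos(angle), 0.2 * math.sin(2.0 * angle)]


def rover_joint_commands(
    t: float,
    total_time: float,
    n_frames: int,
    phase_shifts: Sequence[float],
    controller: LegController = toy_leg_controller,
) -> List[float]:
    """One shared leg controller, one phase shift per leg.

    Returns one block of 3 joint commands per entry in phase_shifts
    (12 values for the four rover legs), leg by leg.
    """
    base = cyclic_phase(t, total_time, n_frames)
    commands: List[float] = []
    for shift in phase_shifts:
        commands.extend(controller((base + shift) % 1.0))
    return commands


def x_distance_reward(initial_x: Sequence[float], final_x: Sequence[float]) -> float:
    """Assumed objective: how far the most forward voxel moved along x."""
    return max(final_x) - max(initial_x)
```

Because the controller only sees the phase within one time frame, the same motion repeats in each of the N frames, which is what should push the search toward smooth, cyclic gaits.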

2- Walker

- Variables

  - Modularity and Hierarchy

    - Number of Variables

      - As with the rover, I can explore the benefits of modularity and hierarchy by taking advantage of the symmetry in the problem.

      - Each leg has 2 independent degrees of freedom.


- Objective Function

  - This problem will be harder, as the walker has to learn to stand first and then walk.

  - I can try to make it easier by restraining some degrees of freedom.

  - I want to explore whether the graphs learned for the two behaviors (standing and walking) are related.
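A sketch of how the walker's symmetry and restrained degrees of freedom could be expressed, assuming a single evolved 2-DOF leg controller (its outputs are called hip and knee here only for readability), a right leg that replays the left leg's motion half a cycle later, and restrained joints held at zero. All names are hypothetical, and which joints to restrain is left as a parameter.

```python
import math
from typing import Callable, Collection, List, Tuple

LegController = Callable[[float], Tuple[float, float]]  # phase -> (hip, knee)


def toy_walker_leg(phase: float) -> Tuple[float, float]:
    """Hypothetical stand-in for an evolved network driving one 2-DOF leg."""
    angle = 2.0 * math.pi * phase
    return 0.4 * math.sin(angle), 0.2 * max(0.0, math.cos(angle))


def walker_joint_commands(
    phase: float,
    controller: LegController = toy_walker_leg,
    locked: Collection[int] = frozenset(),
) -> List[float]:
    """Expand one leg controller to both legs: [left hip, left knee, right hip, right knee].

    The right leg replays the left leg half a cycle later, and any joint index
    in `locked` is held at 0.0 to restrain that degree of freedom.
    """
    left = controller(phase % 1.0)
    right = controller((phase + 0.5) % 1.0)
    commands = [*left, *right]
    return [0.0 if i in locked else c for i, c in enumerate(commands)]
```

With this split, the search only has to find one 2-output leg controller instead of four independent joint signals, and locking joints (for example both knees) gives a simpler problem to train on before releasing them.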

Progress and Results:

- Integration:

  - Finished integrating WANN with MetaVoxels: the libraries/functions only need to be loaded once, after which they are called for each simulation, with simulations run in parallel.

- Training

  - Objective function:

    - the maximum x position reached by any voxel

  - Training Results:

-----

DICE Integration

Next Steps:

- Implement WANN for the rover

- Beyond WANN

  - Structure Learning

    - Information gain using the ensemble method

    - Data-driven differential equations

    - Integrate shape and control search